Unlocking High-Performance AI: How AHHA Labs Builds Smarter Datasets

By Doohee Lee, AI Researcher at AHHA Labs

When it comes to AI model performance, nothing matters more than the quality of your data. At AHHA Labs, we believe great models start long before training begins—they start with a smart data strategy. From data collection and precise labeling to performance feedback analysis, we take a data-first approach that helps our clients build robust, production-ready AI systems.

In this article, we share the principles and hands-on methods behind our dataset development process.

1. Collecting the Right Data: It’s Not About Volume—It’s About Variety

One of the biggest misconceptions in AI is that more data automatically leads to better results. In reality, model performance depends not on how much data you have, but whether your dataset reflects the full spectrum of real-world scenarios.

(1) Capture a wide range of cases

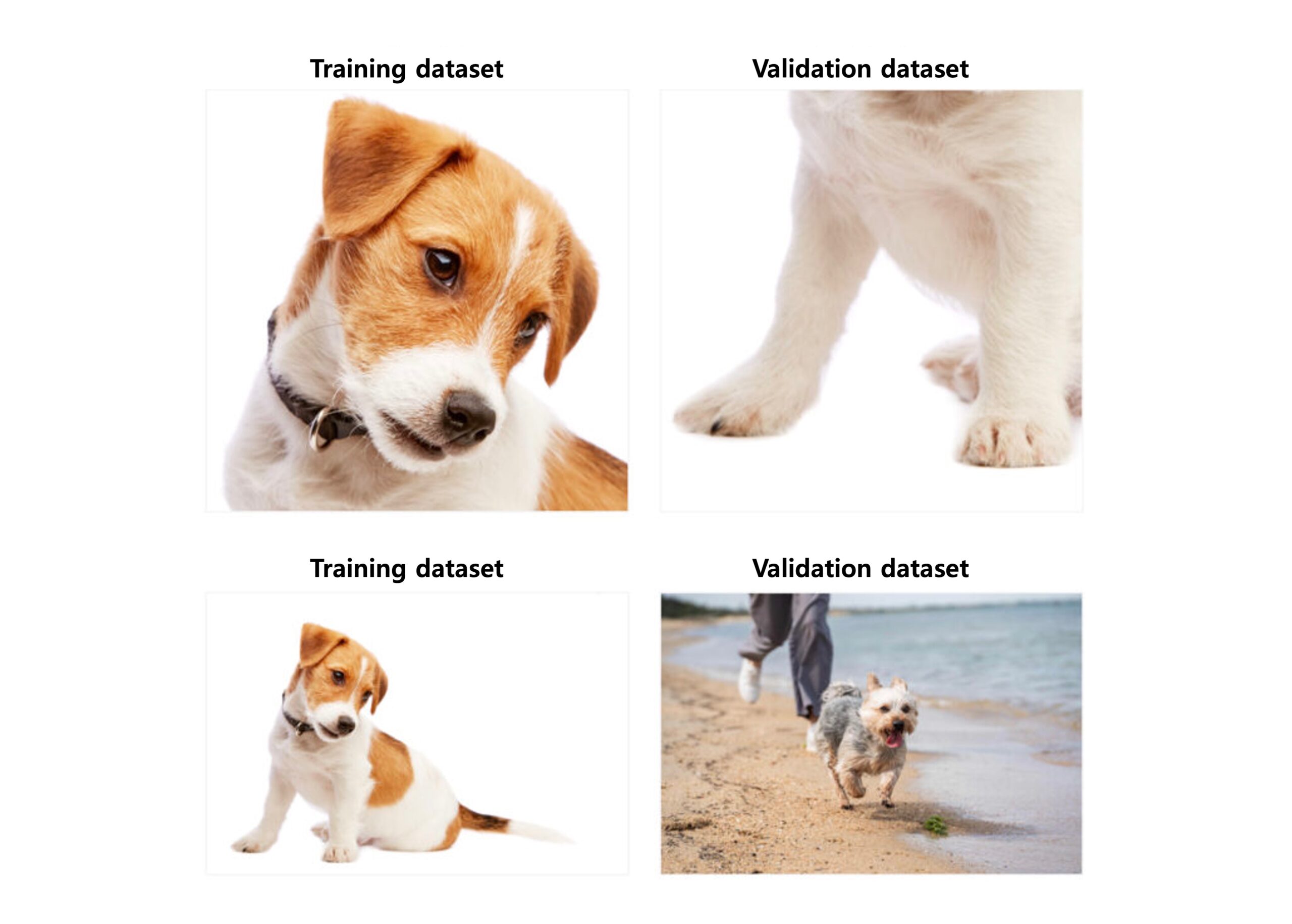

Imagine training a model to detect dogs using only images of their faces. It might completely miss a dog’s paw or tail in a test image. The same issue happens in industrial AI: a model trained only on dent defects may fail to identify scratches or contamination. That’s why we actively source data across all relevant defect types and operational variations.

Imagine training a model to detect dogs using only images of their faces. It might completely miss a dog’s paw or tail in a test image. The same issue happens in industrial AI: a model trained only on dent defects may fail to identify scratches or contamination. Image Credit: AHHA Labs

(2) Don’t ignore the edge cases

Edge cases—rare but critical scenarios—are often the reason models fail in real-world applications. These typically involve subtle defects or unusual feature combinations that weren’t present in the training data. When missing, rare, or underrepresented examples in the training data cause the model to underperform, these are referred to as “model blind spots.”

AHHA Labs uses initial model outputs to guide data gap to make sure that the edge cases are incorporated into our training process from the beginning. This strategy drastically reduces the risk of blind spots and improves the model’s ability to generalize in real-world scenarios.

🚀At AHHA Labs, we don’t just collect large volumes of data—we focus on selecting the right data that enables effective learning and generalization. By sourcing diverse cases and analyzing gaps in the dataset, we ensure the model is equipped to solve real-world problems.

✅ Curate diverse examples across defect types (dents, scratches, contamination)

✅ Use augmentation strategically to simulate variations

✅ Continuously enrich datasets by uncovering and addressing model blind spots

2. Designing High-Quality Datasets That Reflect Real-World Environments

One of the most critical factors in AI model development is the consistency between the training dataset and the evaluation dataset. Misalignment between the two can significantly degrade model performance.

(1) Shape variations matter

Even slight differences in the shape of the object can impact prediction accuracy. For example, if the training data includes only round dent defects, but the evaluation dataset contains elongated oval dents, the model will likely fail to recognize the oval dents.

(2) Class differences matter

Class diversity also plays a major role. If a model is trained only on images of a dog’s face, it may fail to recognize a dog’s paw during evaluation. One might think that this must be common sense, but the reality is not always as easy as distinguishing faces from paws. Certain defects like dents and scratches might be hard to tell apart visually and the model could end up with not enough training data for dents, for instance, because what appeared to be dents were in fact, scratches. This is a fairly common pitfall in creating datasets for industrial AI applications in real life.

Class diversity also plays a major role. If a model is trained only on images of a dog’s face, it may fail to recognize a dog’s paw during evaluation. Image Credit: AHHA Labs

🚀 At AHHA Labs, we help minimize the gap between training and evaluation dataset—so our clients get AI models that perform reliably in real-world environments.

✅ Partner with clients to build datasets grounded in real-world applications.

✅ Analyze and adjust for differences in object size, shape, location, and background

✅ Ensure a broad range of normal samples to reduce false positives for anomaly detection

3. Labeling with Precision: Clear Guidelines, Consistent Results

AI models can only learn what we teach them—and labeling is how we teach them. Inconsistent labeling is one of the biggest sources of error, especially in industrial AI where visual ambiguity is prevalent.

We often see cases where even human inspectors disagree—what one calls a “minor scratch,” another calls “normal.” That’s why AHHA Labs builds clear, context-specific labeling guides with each client.

🚀 Backed by extensive field experience in industrial data and AI modeling, AHHA Labs works closely with clients to develop accurate and reliable labeling guidelines.

✅ Standardize definitions for defect types and extent of defects

✅ Determine the most optimal labeling for ambiguous cases such as overlapping objects

✅ Provide labeling guide specific to respective industry vertical

4. Tuning Training Parameters – Strategies for Applying Effective Data Augmentation

To improve AI model performance, it’s essential to apply data augmentation effectively. At AHHA Labs, we leverage a variety of augmentation techniques to help models maintain high accuracy across a wide range of real-world conditions.

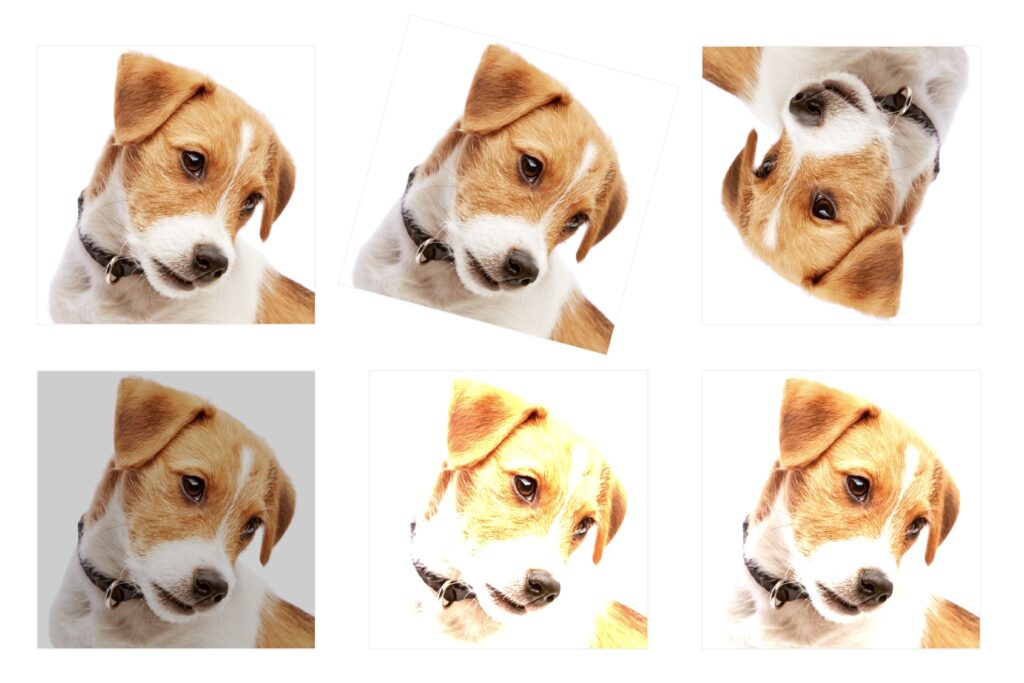

(1) Geometric transformations

These are particularly useful for teaching the model to understand the directionality of defects. For instance, if a model is only trained on scratches that run top to bottom, it may fail to detect scratches running diagonally. By applying horizontal and vertical flips, the model learns to recognize patterns regardless of orientation. Rotation techniques further ensure that the model doesn’t become dependent on a single angle of appearance.

(2) Scale transformations

A model trained only on large objects—say, large dogs—may struggle to detect smaller ones. For example, resizing an image from 420×420 to 1024×1024 increases pixel resolution for small objects, making them easier for the model to recognize. Proper scaling ensures performance is consistent across object sizes.

(3) Brightness and contrast adjustment

In dynamic production settings, lighting conditions may shift due to moving operators, machinery, or environmental changes. Shadows and reflections can degrade model performance unless accounted for during training. Adjusting brightness and contrast in the training dataset strengthens the model’s resilience to real-world lighting variability.

To improve AI model performance, it’s essential to apply data augmentation effectively. At AHHA Labs, we leverage a variety of augmentation techniques to help models maintain high accuracy across a wide range of real-world conditions. Image Credit: AHHA Labs

🚀 At AHHA Labs, we tailor augmentation strategies to match each client’s operational environment.

✅ We don’t apply augmentations at random—instead, we analyze evaluation dataset to select only what’s necessary

✅ We apply diverse transformations to prevent the model from overfitting to specific patterns

✅ We design datasets that reflect lighting shifts, scale variations, and other challenges found in real-world industrial settings

Build High-Performance AI Models with AHHA Labs

At AHHA Labs, we work hand-in-hand with clients to collect, analyze, and optimize data for maximum AI performance. From dataset development to model fine-tuning, we tailor every step to your specific needs—ensuring reliable results in real-world environments.

If you’re looking to build high-quality datasets and unlock the full potential of your AI models, partner with AHHA Labs to bring your AI vision to life!